Unbounded: A Generative Infinite Game of Character Life Simulation

Abstract

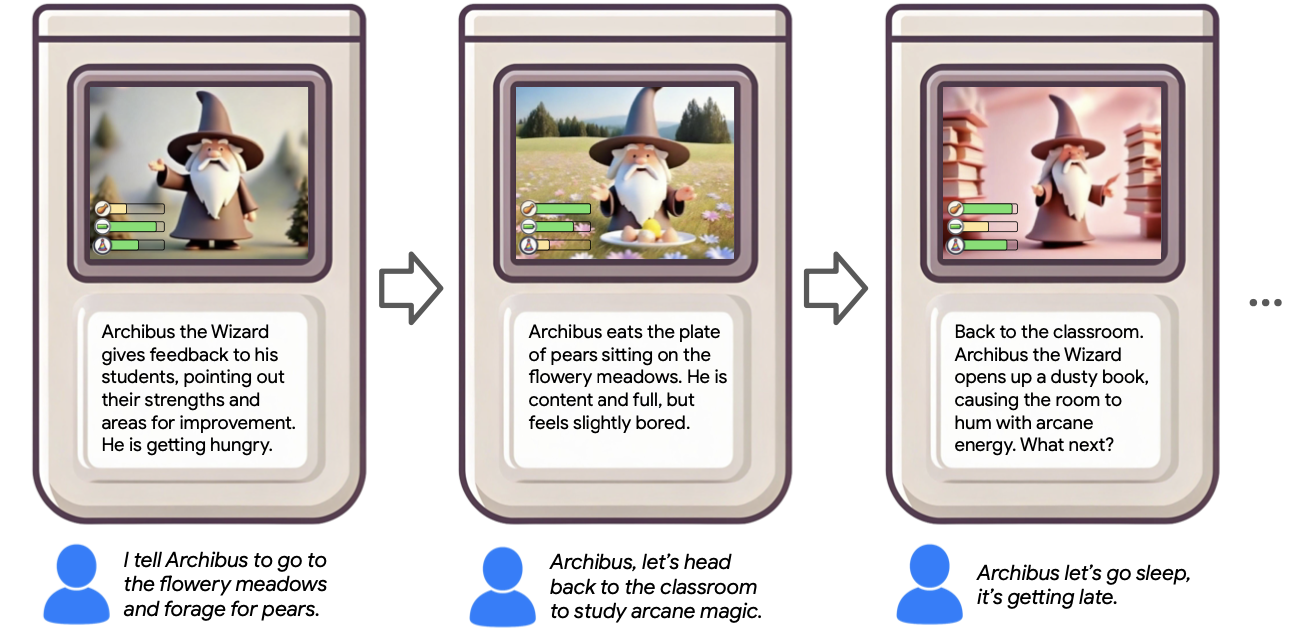

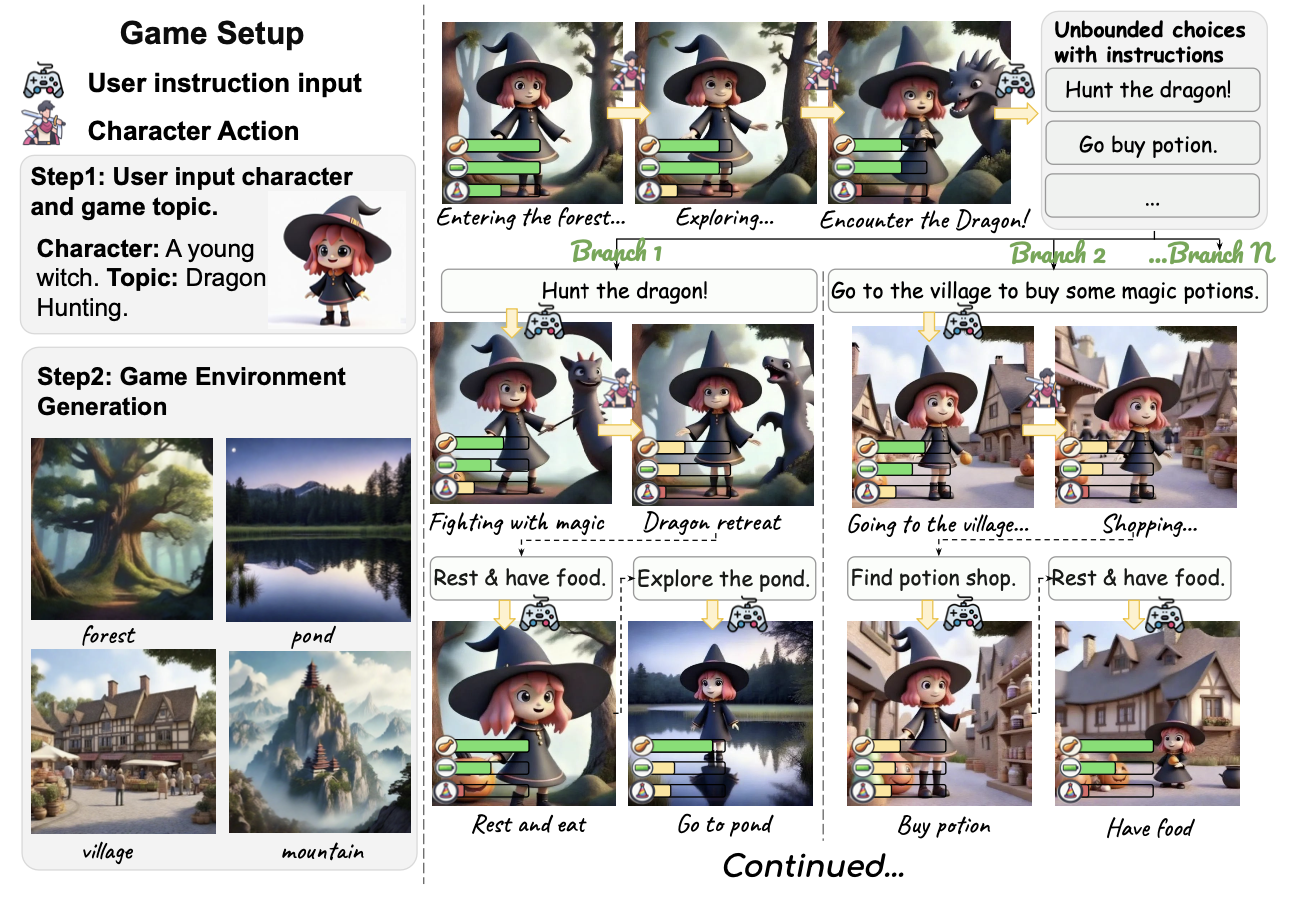

We introduce the concept of a generative infinite game, a video game that transcends the traditional boundaries of finite, hard-coded systems by using generative models. Inspired by James P. Carse's distinction between finite and infinite games, we leverage recent advances in generative AI to create Unbounded: a game of character life simulation that is fully encapsulated in generative models. Drawing inspiration from sandbox life simulations, Unbounded lets you interact with an autonomous virtual character in a virtual world by feeding it, playing with it, and guiding it, through open-ended mechanics generated by a large language model (LLM), some of which can be emergent. To build Unbounded, we propose technical innovations in both the LLM and visual generation domains. Specifically, we present: (1) a specialized, distilled LLM that dynamically generates game mechanics, narratives, and character interactions in real time, and (2) a new dynamic regional image prompt adapter (IP-Adapter) for vision models that ensures consistent yet flexible visual generation of a character across multiple environments. We evaluate our system through both qualitative and quantitative analysis, showing significant improvements in character life simulation, user instruction following, narrative coherence, and visual consistency of both characters and environments compared to related traditional approaches.
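One way to picture an LLM acting as the game engine is that each player action (feeding, playing, guiding) yields a structured response that updates the character's state. The sketch below is a minimal illustration under loudly stated assumptions: the JSON schema (`narration`, `deltas`, `location`), the `CharacterState` fields, and the 0 to 100 scales are all hypothetical and are not the paper's actual output format.

```python
import json
from dataclasses import dataclass, field


# Hypothetical character state; attribute names and scales are illustrative only.
@dataclass
class CharacterState:
    name: str
    hunger: int = 50      # assumed scale: 0 = full, 100 = starving
    happiness: int = 50   # assumed scale: 0..100
    location: str = "home"
    history: list = field(default_factory=list)


def clamp(value: int, lo: int = 0, hi: int = 100) -> int:
    """Keep a stat inside its assumed valid range."""
    return max(lo, min(hi, value))


def apply_llm_update(state: CharacterState, llm_output: str) -> CharacterState:
    """Apply a JSON state delta produced by a (hypothetical) game-engine LLM."""
    update = json.loads(llm_output)
    deltas = update.get("deltas", {})
    state.hunger = clamp(state.hunger + int(deltas.get("hunger", 0)))
    state.happiness = clamp(state.happiness + int(deltas.get("happiness", 0)))
    # Missing keys leave the corresponding state unchanged.
    state.location = update.get("location", state.location)
    state.history.append(update.get("narration", ""))
    return state


# Usage with a hand-written stand-in for an LLM reply.
pet = CharacterState(name="Pip")
reply = '{"narration": "You feed Pip a pie.", "deltas": {"hunger": -30, "happiness": 10}, "location": "kitchen"}'
apply_llm_update(pet, reply)
print(pet.hunger, pet.happiness, pet.location)  # 20 60 kitchen
```

Clamping the stats in ordinary code, rather than trusting the model to respect the ranges, is one simple way to keep open-ended generated mechanics from driving the state into invalid values.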

Method

Task Overview

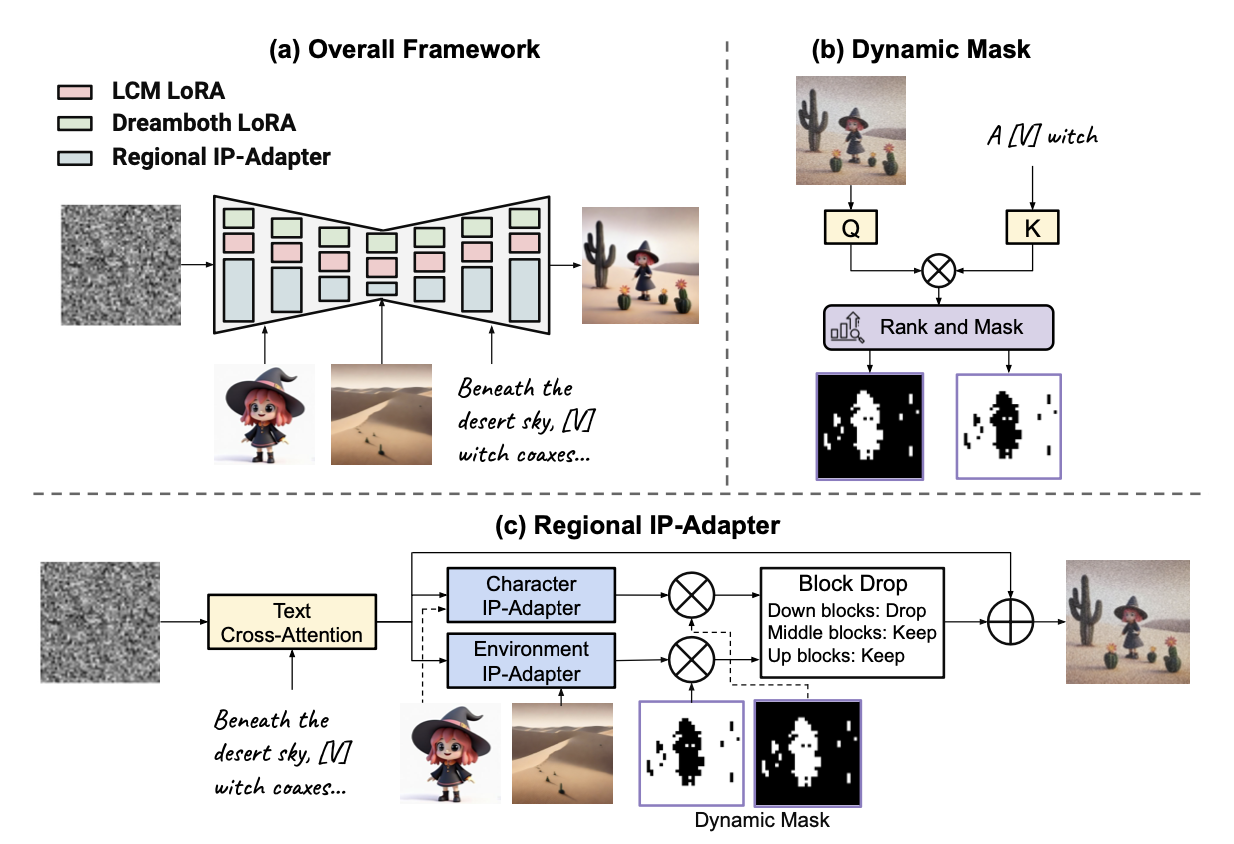

Regional IP-Adapter with Block Drop for Environment Consistency

(a) We achieve real-time image generation with a Latent Consistency Model (LCM) LoRA, maintain character consistency with DreamBooth LoRAs, and introduce a regional IP-Adapter (shown in (c)) to improve environment and character consistency.
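Both the LCM LoRA and the DreamBooth LoRAs are low-rank adapters, and a standard way to stack several of them is to add each adapter's scaled low-rank update to the frozen weight: W' = W + Σ_i s_i · B_i A_i. The sketch below shows only that arithmetic on toy 2×2 matrices; the adapter matrices and the scales (1.0 and 0.5) are made-up values, not the ones used in Unbounded.

```python
def matmul(a, b):
    """Plain-Python matrix product of a (n x k) and b (k x m)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def merge_loras(base, adapters):
    """Return base + sum_i scale_i * (B_i @ A_i) for a list of (B, A, scale) tuples."""
    merged = [row[:] for row in base]  # copy so the frozen base weight is untouched
    for b, a, scale in adapters:
        delta = matmul(b, a)  # low-rank update: (d_out x r) @ (r x d_in)
        for i, row in enumerate(delta):
            for j, v in enumerate(row):
                merged[i][j] += scale * v
    return merged


base = [[1, 0], [0, 1]]
lcm_lora = ([[1], [0]], [[0, 1]], 1.0)        # hypothetical rank-1 "speed" adapter
dreambooth_lora = ([[0], [1]], [[1, 0]], 0.5)  # hypothetical rank-1 "identity" adapter
print(merge_loras(base, [lcm_lora, dreambooth_lora]))  # [[1.0, 1.0], [0.5, 1.0]]
```

Because each update has rank r, far smaller than the weight dimensions, several adapters can be stored and switched cheaply, and their per-adapter scales trade off sampling speed against identity fidelity.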

(b) Our proposed dynamic mask generation separates the environment and character conditioning, preventing interference between the two.
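A minimal sketch of how such a split could be derived, assuming a per-pixel score of how strongly each location relates to the character (for example, a cross-attention map for the character token). The normalize-then-threshold rule and the 0.5 threshold are assumptions for illustration, not the paper's exact procedure. The key property is that the two masks are complementary, so each location receives exactly one type of image conditioning.

```python
def dynamic_masks(scores, threshold=0.5):
    """Split a 2-D map of character-relevance scores into disjoint character/environment masks."""
    flat = [v for row in scores for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # a constant map yields an empty character mask instead of dividing by zero
    # Min-max normalize, then threshold: 1 marks character pixels.
    char_mask = [[1 if (v - lo) / span >= threshold else 0 for v in row] for row in scores]
    # The environment mask is the exact complement, so the regions never overlap.
    env_mask = [[1 - m for m in row] for row in char_mask]
    return char_mask, env_mask


char, env = dynamic_masks([[0.1, 0.9], [0.2, 0.8]])
print(char)  # [[0, 1], [0, 1]]
print(env)   # [[1, 0], [1, 0]]
```

Recomputing the mask at every generation step, rather than fixing a layout in advance, lets the character region follow wherever the model actually places the character.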

(c) Our approach introduces a dual-conditioning and dynamic regional injection mechanism that represents both the character and the environment simultaneously in the generated images.
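IP-Adapter's decoupled cross-attention adds an image-prompt attention term to the usual text cross-attention: Z = Attn(Q, K_text, V_text) + λ · Attn(Q, K_img, V_img). The sketch below illustrates the regional, dual-conditioning idea, assuming a binary per-token character mask M. Character image features are injected where M = 1 and environment image features where M = 0. Key/value projections are omitted (the inputs are assumed already projected), the scales `lam_char` and `lam_env` are hypothetical, and block drop is not modeled.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(q)
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
    weights = softmax(scores)
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


def regional_ip_attention(queries, text_kv, char_kv, env_kv, char_mask, lam_char=1.0, lam_env=1.0):
    """queries: one vector per spatial token; char_mask[i] is 1 for character tokens, 0 otherwise.

    Each *_kv argument is a (keys, values) pair of already-projected vectors.
    """
    out = []
    for q, m in zip(queries, char_mask):
        z_text = attention(q, *text_kv)
        z_char = attention(q, *char_kv)
        z_env = attention(q, *env_kv)
        # Text attention everywhere; each image-prompt term only inside its own region.
        out.append([
            t + lam_char * m * c + lam_env * (1 - m) * e
            for t, c, e in zip(z_text, z_char, z_env)
        ])
    return out


queries = [[1.0, 0.0], [0.0, 1.0]]
text_kv = ([[1.0, 0.0]], [[0.0, 0.0]])
char_kv = ([[1.0, 0.0]], [[1.0, 1.0]])
env_kv = ([[1.0, 0.0]], [[5.0, 5.0]])
print(regional_ip_attention(queries, text_kv, char_kv, env_kv, char_mask=[1, 0]))
# [[1.0, 1.0], [5.0, 5.0]]
```

With a single key per condition, each attention call returns its value vector unchanged, which makes it easy to see that the first (character) token receives only character features and the second (environment) token receives only environment features.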

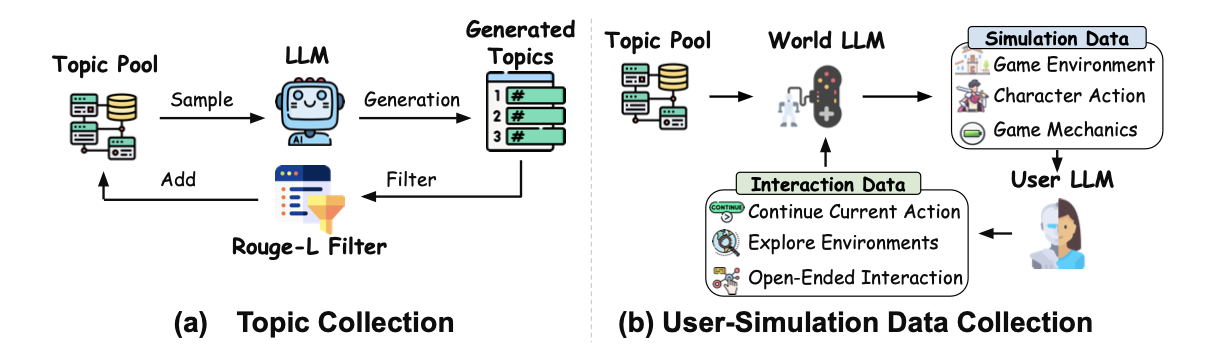

Language Model Game Engine with Open-Ended Interactions and Integrated Game Mechanics

Overview of our user-simulation data collection process for LLM distillation.

(a) We begin by collecting diverse topic and character data, filtered using ROUGE-L for diversity.
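ROUGE-L scores the overlap between two texts by the length of their longest common subsequence (LCS) of tokens. A common diversity filter keeps a new candidate only if its ROUGE-L against everything already kept falls below a threshold. The sketch below assumes whitespace tokenization, the F1 variant of ROUGE-L, and a threshold of 0.7 (the value used by Self-Instruct). The threshold Unbounded actually uses is not stated here, and the example topics are invented.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists (O(len(a) * len(b)) DP)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, start=1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def rouge_l_f1(candidate, reference):
    """ROUGE-L F1 over lowercase whitespace tokens."""
    c, r = candidate.lower().split(), reference.lower().split()
    lcs = lcs_length(c, r)
    if lcs == 0:  # also covers empty strings
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)


def filter_diverse(candidates, threshold=0.7):
    """Keep a candidate only if its ROUGE-L F1 against every kept item is below threshold."""
    kept = []
    for text in candidates:
        if all(rouge_l_f1(text, k) < threshold for k in kept):
            kept.append(text)
    return kept


topics = [
    "a wizard dog explores a haunted castle",
    "a wizard dog explores the haunted castle",    # near-duplicate, F1 = 6/7
    "a robot cat bakes bread in a cozy village",   # distinct, F1 = 0.25
]
print(filter_diverse(topics))
```

The greedy filter depends on input order, and it costs one LCS computation per (candidate, kept item) pair, which is acceptable for topic and character seeds of modest size.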

(b) The World LLM and User LLM interact to generate user-simulation data through multi-round exchanges.
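The multi-round exchange can be sketched as a loop that alternates a simulated player turn and a game-engine turn and then records the transcript as distillation data. Everything concrete here is an assumption: the message format, the role names, the user-first turn order, and the stub functions standing in for the World LLM and User LLM (any callable that maps a message history to a string would fit).

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
LLM = Callable[[List[Message]], str]  # hypothetical interface: history in, reply out


def simulate_dialogue(world_llm: LLM, user_llm: LLM, topic: str, character: str,
                      rounds: int = 3) -> List[Message]:
    """Alternate User LLM and World LLM turns; return the transcript as one training example."""
    transcript: List[Message] = [
        {"role": "system", "content": f"Topic: {topic}. Character: {character}."}
    ]
    for _ in range(rounds):
        user_turn = user_llm(transcript)    # simulated player instruction
        transcript.append({"role": "user", "content": user_turn})
        world_turn = world_llm(transcript)  # game-engine reply (narration, state changes)
        transcript.append({"role": "world", "content": world_turn})
    return transcript


# Stub models for illustration only; real runs would call actual LLMs.
def stub_user(history: List[Message]) -> str:
    return f"user action {sum(m['role'] == 'user' for m in history) + 1}"


def stub_world(history: List[Message]) -> str:
    return f"world reply to: {history[-1]['content']}"


for msg in simulate_dialogue(stub_world, stub_user, "picnic", "a wizard dog", rounds=2):
    print(msg["role"], "|", msg["content"])
```

Keeping the two models behind the same callable interface makes it easy to swap the stubs for real models and to vary the number of rounds per topic and character pair.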

Results

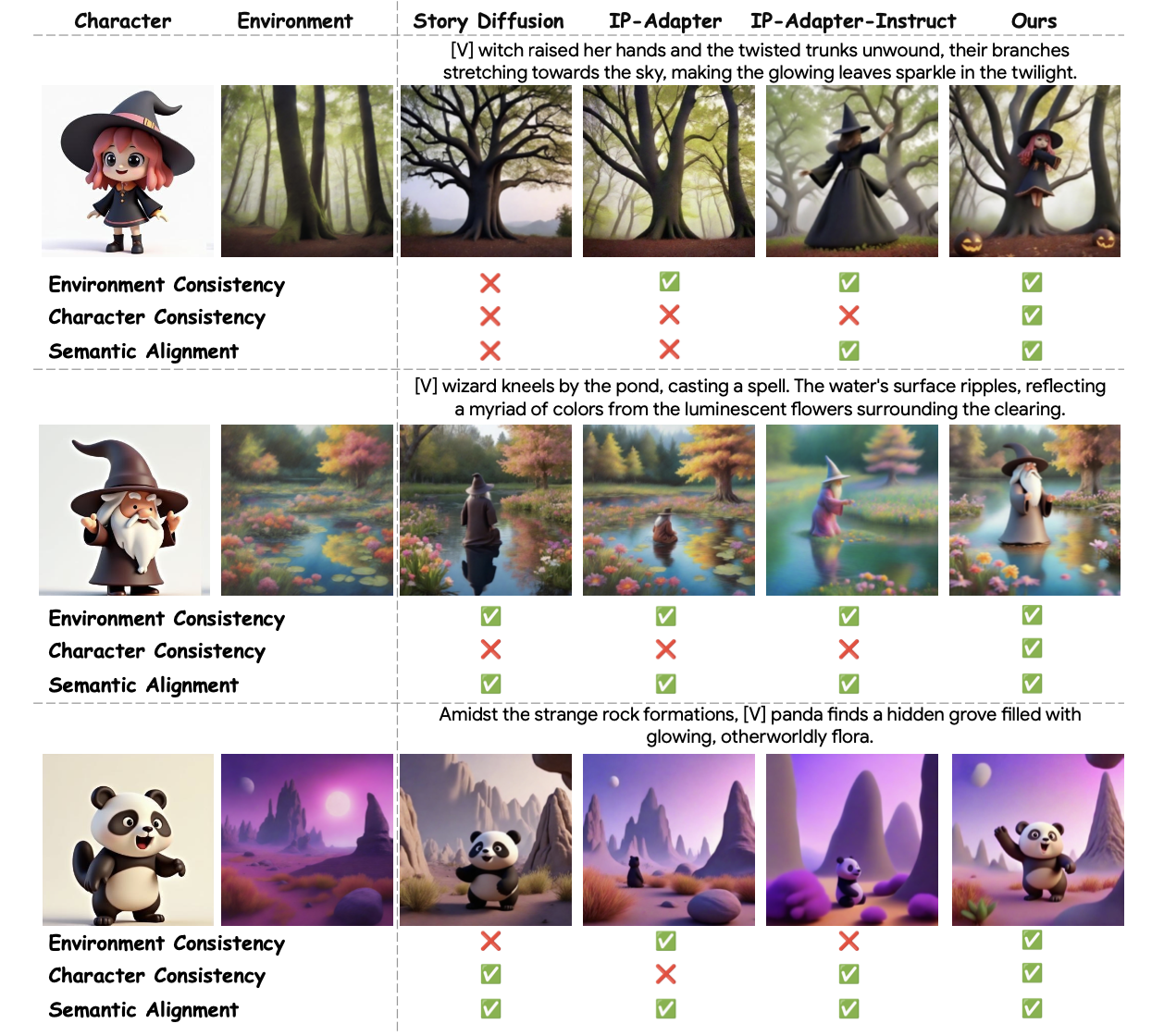

Comparison with Different Approaches for Maintaining Environment Consistency and Character Consistency

Effectiveness of Dynamic Regional IP-Adapter with Block Drop

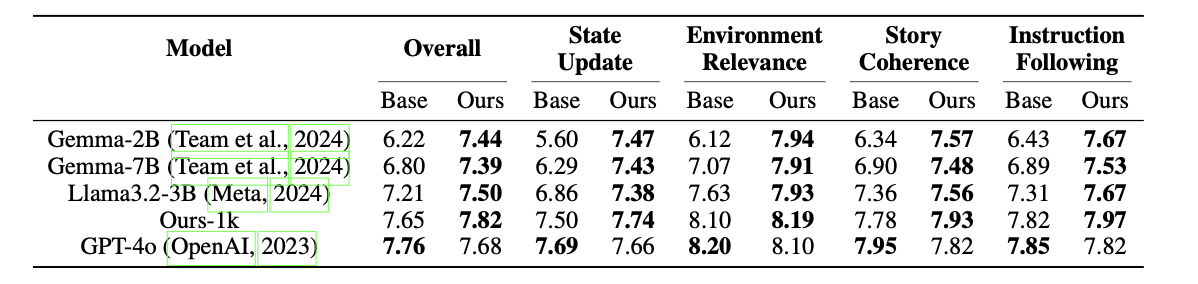

Effectiveness of Distilling Specialized Large Language Model

More Generative Game Examples

BibTeX

@article{li2024unbounded,
  author = {Jialu Li and Yuanzhen Li and Neal Wadhwa and Yael Pritch and David E. Jacobs and Michael Rubinstein and Mohit Bansal and Nataniel Ruiz},
  title = {Unbounded: A Generative Infinite Game of Character Life Simulation},
  journal = {arxiv},
  year = {2024},
  url = {https://arxiv.org/abs/2410.18975}
}